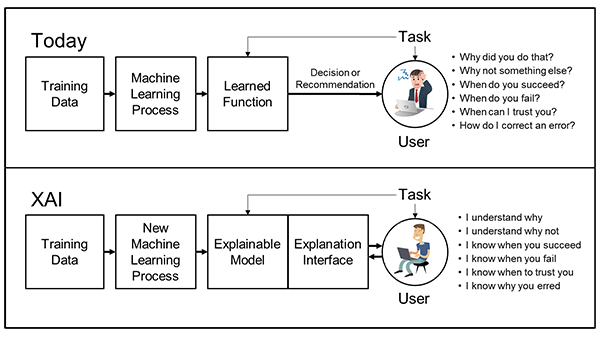

“Explainable AI (XAI) for Complex Models” is a research topic that addresses the challenge of making complex, black-box AI models more transparent and interpretable. XAI aims to show how these models arrive at their decisions, which is crucial for building trust, understanding model behavior, and deploying models ethically. Here’s how you could structure a research paper on this topic:

1. Introduction:

Introduce the concept of Explainable AI (XAI) and its significance in the context of complex models.

Discuss the growing complexity of AI models and the resulting need for transparency in decision-making.

2. Literature Review:

Survey existing methods for model explanation, including inherently interpretable models (e.g., decision trees, linear regression) and their limitations.

Highlight challenges in explaining complex models like deep neural networks, ensemble models, and other black-box algorithms.

3. Challenges in Explaining Complex Models:

Discuss the inherent opacity of deep learning models due to their architecture and large number of parameters.

Address challenges related to high-dimensional data, non-linearity, and intricate feature interactions.

4. State-of-the-Art XAI Techniques:

Detail modern techniques developed for explaining complex models, such as:

LIME (Local Interpretable Model-agnostic Explanations)

SHAP (SHapley Additive exPlanations)

Integrated Gradients

Attention visualization and feature visualization

Layer-wise Relevance Propagation (LRP)

Describe how these techniques work and how they’ve been applied to various complex models.
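To make the idea behind SHAP more concrete, here is a minimal sketch of exact Shapley values computed by brute force. Everything in it is an assumption for illustration: `toy_model`, the input `x`, and the all-zeros `baseline` are made up, and "removing" a feature is approximated by swapping in its baseline value. Practical SHAP implementations rely on faster algorithms and approximations, since this enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def toy_model(x):
    # Hypothetical model: two linear terms plus one interaction term.
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


def shapley_values(model, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(x)

    def value(subset):
        # Model output when only the features in `subset` are "present".
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 3) for p in phi])
# The attributions should add up to the gap between the two outputs.
print(round(sum(phi), 3), toy_model(x) - toy_model(baseline))
```

In this toy setup the interaction term is split evenly between the two features involved, and the attributions sum to the difference between the model output at `x` and at the baseline. That additivity is the property that makes Shapley-based explanations attractive.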

5. Tailoring XAI for Complex Models:

Propose modifications or adaptations of existing XAI techniques to address the challenges posed by complex models.

Discuss potential hybrid approaches that combine different XAI methods to provide a more comprehensive understanding.

6. Quantifying Explanation Quality:

Introduce metrics to evaluate the quality of explanations provided by XAI methods.

Discuss the trade-off between model accuracy and interpretability, and between an explanation’s fidelity to the model and its simplicity.
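One family of metrics you could discuss here is deletion-based faithfulness: remove features in the order an explanation ranks them and watch how quickly the model output drops. The sketch below is only an illustration under stated assumptions. `toy_model`, the `attributions` scores, and the zero baseline are hypothetical, and "removing" a feature simply means resetting it to its baseline value.

```python
def toy_model(x):
    # Hypothetical linear model used only for this illustration.
    return 4.0 * x[0] + 0.5 * x[1] + 2.0 * x[2]


def deletion_curve(model, x, baseline, order):
    """Model outputs as features are reset to baseline one by one."""
    current = list(x)
    outputs = [model(current)]
    for i in order:
        current[i] = baseline[i]
        outputs.append(model(current))
    return outputs


def area_under_curve(outputs):
    # Trapezoidal rule with unit spacing between deletion steps.
    return sum((a + b) / 2.0 for a, b in zip(outputs, outputs[1:]))


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
attributions = [4.0, 0.5, 2.0]  # hypothetical scores from some XAI method

# Delete the most important features first, then try the opposite order.
ranked = sorted(range(len(x)), key=lambda i: abs(attributions[i]), reverse=True)
worst_first = ranked[::-1]

auc_ranked = area_under_curve(deletion_curve(toy_model, x, baseline, ranked))
auc_reversed = area_under_curve(deletion_curve(toy_model, x, baseline, worst_first))
print(auc_ranked, auc_reversed)
```

A smaller area under the deletion curve suggests the explanation ranks the truly influential features first. Comparing against a reversed or random ordering gives a reference point for how much that ranking actually helps.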

7. Case Studies and Applications:

Provide case studies where XAI techniques have been applied to complex models in real-world scenarios:

Healthcare: Interpretable medical image diagnoses using deep learning.

Finance: Explaining predictions of complex financial market models.

Autonomous vehicles: Understanding decisions of self-driving cars.

Natural language processing: Explaining language model-based recommendations.

8. Ethical Considerations:

Address the ethical implications of XAI, including its impact on user privacy, accountability, and potential biases in explanations.

9. Future Directions:

Discuss potential future developments and research directions in XAI for complex models:

Advancements in attention-based methods.

Combining XAI with causality and counterfactual explanations.

Applying XAI to emerging AI paradigms such as quantum machine learning.
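As a small illustration of the counterfactual explanations mentioned above, the sketch below searches for the smallest change to a single feature that flips a decision. The `approve` rule, the feature names, and the step size are all hypothetical. Real counterfactual methods typically search over several features at once and add constraints such as plausibility.

```python
def approve(x):
    # Hypothetical credit rule: approve when the score reaches 20.
    score = x["income"] - 2.0 * x["debt"]
    return score >= 20.0


def single_feature_counterfactual(predict, x, feature, step, max_steps=1000):
    """Nudge one feature in fixed steps until the prediction flips."""
    original = predict(x)
    candidate = dict(x)
    for _ in range(max_steps):
        candidate[feature] += step
        if predict(candidate) != original:
            return candidate
    return None  # no flip found within the search budget


applicant = {"income": 30.0, "debt": 10.0}
cf = single_feature_counterfactual(approve, applicant, "income", step=1.0)
print(approve(applicant), cf)
```

The result reads as an explanation of the form "the application would have been approved if income had been this much higher", which is often easier for end users to act on than a list of feature weights.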

10. Conclusion:

Summarize the key findings and contributions of the paper.

Emphasize the importance of continued research in XAI, especially as AI models become more complex.