Home

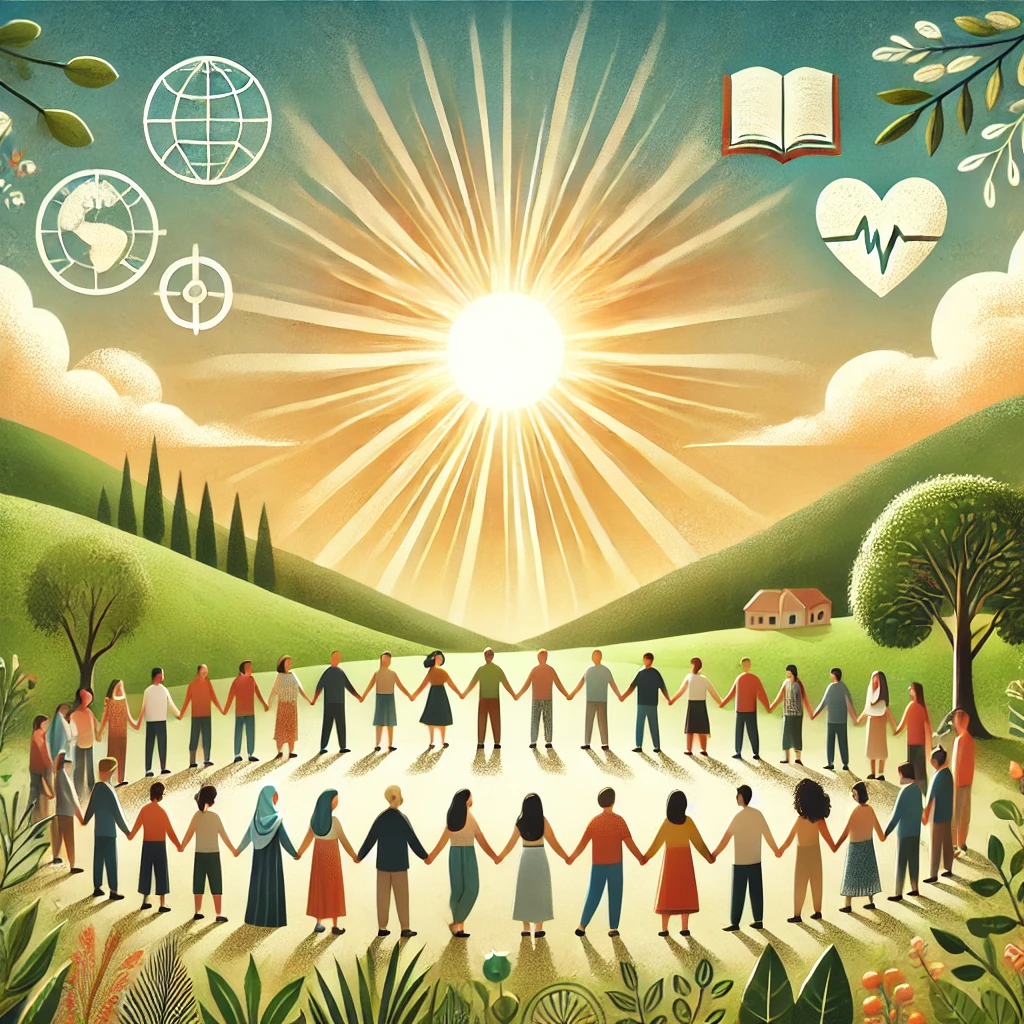

Community Learning Platform: Empowering Growth Through Sharing and Demoing Knowledge Every Weekend, Saturday (11 am to 6 pm)

About Us

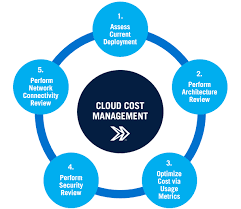

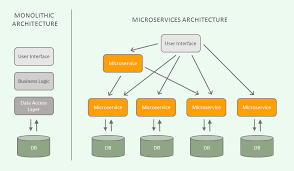

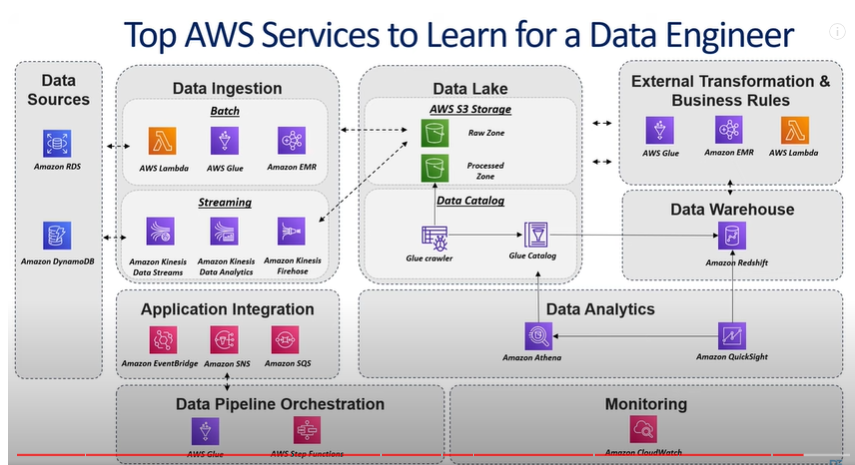

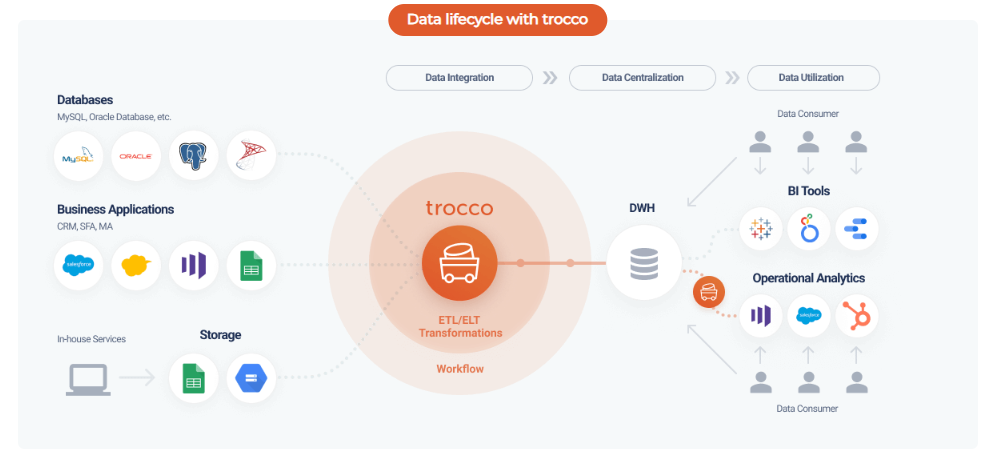

Knowledge Power Solution (KPS) is a one-stop solution for anyone preparing to enter the IT industry. We provide live, project-based training that gives students and professionals hands-on experience, and we work with people to enrich each other's technical skills through the shared experience that comes with daily technical tasks. We are a training company with the slogan “Learn-Practice-Lead”. We keep our class sizes small while ensuring professional and career growth through long-term planning. We believe in working on actual projects to understand customer needs and their business impact, with proper governance. We also ask how technology architecture can meet those needs through MVP development at a low cost of operations.

We have trained 100+ candidates in various domains since 2018. Being good people, they support us in our corporate social responsibility work, which includes running weekend meetups, mentoring candidates, and planning careers with a backup plan in this volatile, uncertain, complex, and ambiguous (VUCA) world.

No. 1 Software IT Training Institute in Pune

Join us today and take the first step towards unlocking your potential in the dynamic world of IT. We are committed to empowering you with the knowledge and expertise needed to thrive in the digital era. Choose KPS for IT training and skill development and embark on a journey to success!

Why Choose Us?

- Experienced and Certified Instructors for Exceptional Learning: Our courses are led by highly skilled instructors with extensive industry experience and recognized certifications. They bring real-world knowledge and expertise to the classroom, ensuring you receive top-notch training.

- Hands-on Practical Projects for Real-Life Expertise: At KPS, we believe in the power of learning by doing. Throughout our programs, you’ll engage in hands-on practical projects that simulate real-life scenarios. This approach hones your skills and prepares you to confidently tackle challenges in the IT field.

- Embrace Flexibility in Your Learning Journey: Understanding that everyone’s schedule is unique, we offer flexible learning options. Whether you prefer the convenience of online courses or the traditional classroom setting, we’ve got you covered.

- Unlock Exciting Opportunities with Job Placement Assistance: Your success is our priority. We provide dedicated job placement assistance to help you kickstart or advance your career in the IT industry. Our strong network of industry connections opens doors to exciting opportunities.

- Elevate Your Career with Industry-Recognized Certifications: Upon completing our training programs, you’ll earn industry-recognized certifications that hold immense value in the job market. These certifications validate your skills and provide you with a competitive edge in your career journey.

Key Features of Our Training Services

Live Virtual Classes: We offer live virtual classes and webinars with industry experts, providing real-time learning opportunities, Q&A sessions, and networking possibilities.

Certifications and Badges: We offer industry-recognized certifications and badges upon course completion, adding value to learners' resumes and showcasing their expertise.

Live Projects and Internships: We collaborate with companies to give learners opportunities to work on real industry projects and internships and gain practical experience.

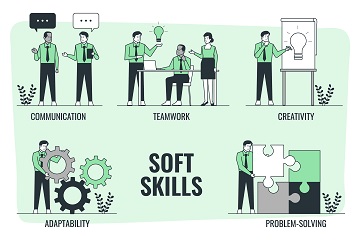

Soft Skills Training: We recognize the importance of soft skills and include training modules on communication, teamwork, and leadership to enhance learners' overall employability.

Learning through Open-Source Contributions: We encourage learners to contribute to open-source projects, fostering real-world experience and a sense of community engagement.

What Our Students Say